We’ve recently significantly increased the API request throughput that Agilebase can handle for customers hosted on isolated environments (see below), an option available to all customers on the Enterprise plan.

Firstly, a quick recap of what an API is. It’s simply a mechanism that lets the outside world either take data out of Agilebase or put data into it. That could be a website, an online ordering system, a phone app or any third-party software that communicates with Agilebase, e.g. an accounting package.

Most customers make relatively small numbers of API requests for standard transactions, e.g. sending invoices to an accounting package once a day, but some make multiple requests per second and transfer many GB of data per day.

Agilebase can now cope with a large number of simultaneous API requests, such as those a booking system for popular events might receive, or the myriad of requests generated by the complex systems that run the operations of a large organisation.

Here’s a short, easy-to-understand technical note explaining how this is possible.

Isolated and shared environments

Agilebase originally operated purely as a ‘shared environment’: all customers shared the same computing resources, i.e. processors, memory, databases etc. To ensure fairness, an API queueing system was used, with each customer having their own queue for API requests. If two requests came in at roughly the same time, the second would wait in the queue until the first had finished processing. It’s like queuing up to get an author to sign a book.

Watty62, CC BY-SA 4.0 <https://creativecommons.org/licenses/by-sa/4.0>, via Wikimedia Commons

The reason for having one API queue per customer was so that we could properly estimate and control the computing resources needed to serve all customers while maintaining high performance.
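The per-customer queue can be modelled in a few lines of Python. This is a minimal sketch under our own assumptions, not Agilebase’s actual implementation: each customer gets a first-come, first-served lock, and `PerCustomerQueues` and `handle` are hypothetical names chosen for illustration.

```python
import asyncio


class PerCustomerQueues:
    """Hypothetical model (not Agilebase code): one first-come, first-served
    queue per customer, implemented as one lock per customer."""

    def __init__(self) -> None:
        self._locks = {}  # customer name -> asyncio.Lock

    async def handle(self, customer: str, request_id: int, log: list) -> None:
        if customer not in self._locks:
            self._locks[customer] = asyncio.Lock()
        # asyncio.Lock wakes waiting tasks in arrival order, so each customer's
        # requests are processed one at a time, like a book-signing queue.
        async with self._locks[customer]:
            log.append(("start", customer, request_id))
            await asyncio.sleep(0.01)  # stand-in for the real processing work
            log.append(("end", customer, request_id))


async def main() -> list:
    queues = PerCustomerQueues()
    log: list = []
    # Two requests from "acme" arrive together, plus one from "globex".
    await asyncio.gather(
        queues.handle("acme", 1, log),
        queues.handle("acme", 2, log),
        queues.handle("globex", 1, log),
    )
    return log


if __name__ == "__main__":
    for event in asyncio.run(main()):
        print(event)
```

When run, the second ‘acme’ request only starts after the first has ended, while the ‘globex’ request starts straight away because it sits in a different customer’s queue.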

In fact, that’s still how the system operates for many customers. However, customers on the Enterprise plan, typically larger organisations, also have the option of using an isolated environment. This brings many advantages, including increased performance, enhanced monitoring, control over new version releases and a testing infrastructure to go with it.

There’s now an additional advantage. Rather than a single API queue, a customer can have multiple slots. That’s more like queuing up to pay for shopping at the supermarket, where multiple tills handle a high number of shoppers.

Coulsdon: Waitrose supermarket by Dr Neil Clifton, CC BY-SA 2.0 <https://creativecommons.org/licenses/by-sa/2.0>, via Wikimedia Commons

As a customer, you can in fact set as many ‘tills’, or slots, as you like. So if you have 100 slots and 100 API requests come in within a few milliseconds of each other, they will all start processing and none will be held up. Only if more than that arrive will requests start to queue, and you can also set the maximum time a request can wait in the queue before it is cancelled.
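The ‘tills’ model maps naturally onto a counting semaphore with a time limit on waiting. The Python sketch below is purely illustrative and makes no claim about how Agilebase is implemented: `SlotLimiter`, `slots` and `max_queue_seconds` are hypothetical names, not Agilebase settings.

```python
import asyncio
from typing import Awaitable, Callable


class SlotLimiter:
    """Hypothetical sketch of API slots ('tills'), not Agilebase code.

    Up to `slots` requests are processed at once. Any extra request waits in a
    queue and is cancelled if it waits longer than `max_queue_seconds`.
    """

    def __init__(self, slots: int, max_queue_seconds: float) -> None:
        self._semaphore = asyncio.Semaphore(slots)  # one permit per slot
        self._max_queue_seconds = max_queue_seconds

    async def run(self, work: Callable[[], Awaitable[str]]) -> str:
        try:
            # Wait for a free slot, but give up after the maximum queue time.
            await asyncio.wait_for(self._semaphore.acquire(), self._max_queue_seconds)
        except asyncio.TimeoutError:
            return "cancelled: queued too long"
        try:
            return await work()
        finally:
            self._semaphore.release()  # free the slot for the next request


async def main():
    limiter = SlotLimiter(slots=2, max_queue_seconds=0.05)
    active = 0
    peak = 0

    async def slow_request(n: int) -> str:
        nonlocal active, peak
        active += 1
        peak = max(peak, active)  # track the most requests running at once
        await asyncio.sleep(0.1)  # processing takes longer than the queue limit
        active -= 1
        return f"request {n} done"

    # Five requests arrive at (almost) the same moment.
    results = await asyncio.gather(
        *(limiter.run(lambda n=n: slow_request(n)) for n in range(5))
    )
    return results, peak


if __name__ == "__main__":
    results, peak = asyncio.run(main())
    print(results)
    print("peak concurrency:", peak)
```

With two slots, the first two requests start immediately. The other three wait, and since processing takes 0.1 s while the queue limit is 0.05 s, they are cancelled. Setting `slots=1` reproduces the single-queue behaviour of the shared environment.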

Monitoring

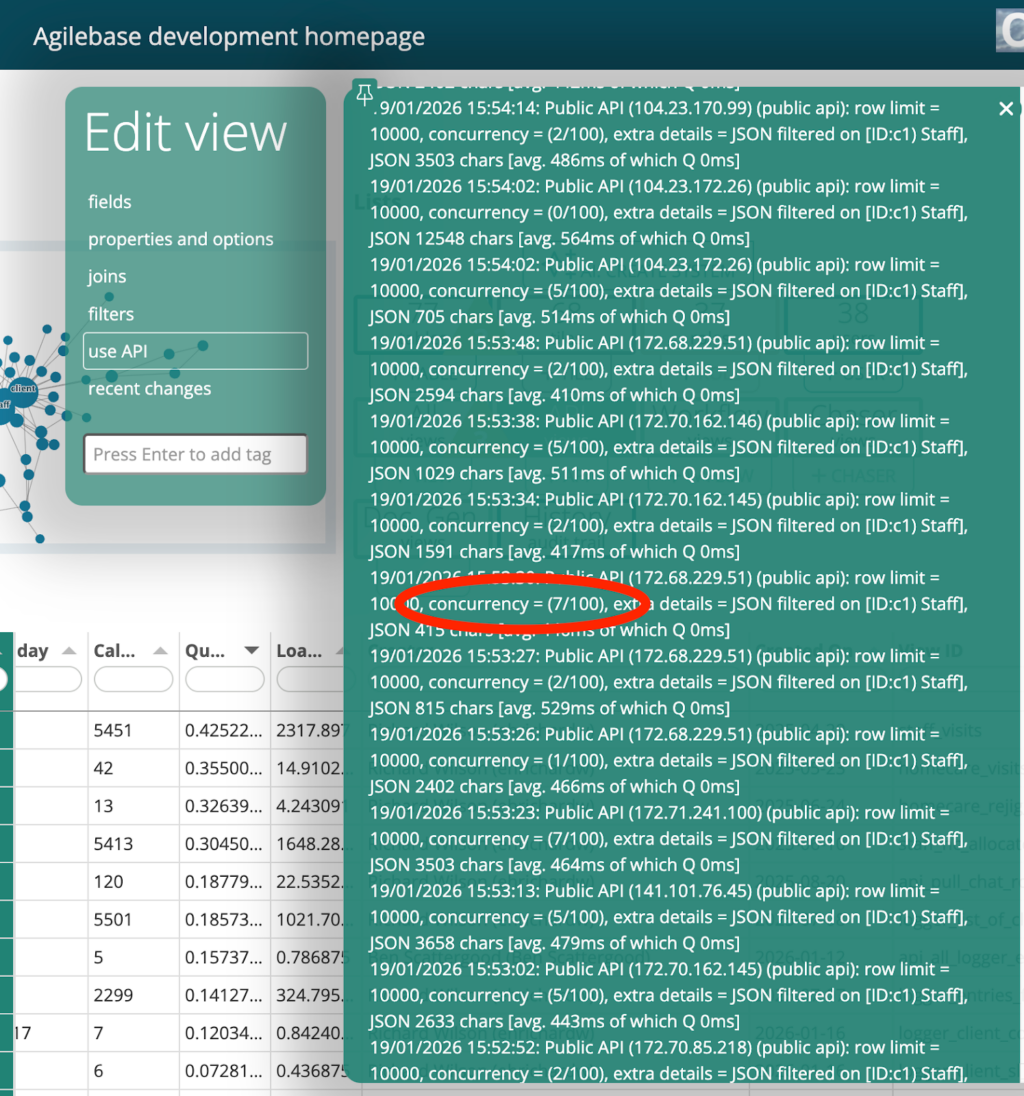

You can see how much of the capacity you’ve set is being used in the API panel for a view. A new metric, concurrency, shows the number of simultaneous requests being processed at the time of each individual request.
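One plausible way to derive a concurrency figure from request start and end times is sketched below. The definition is our assumption (requests in progress at the moment each request starts, including itself), so the panel’s exact calculation may differ; `RequestLog` and `concurrency_at_start` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestLog:
    """Hypothetical record of one API request's start and end times (seconds)."""
    request_id: str
    start: float
    end: float


def concurrency_at_start(logs: List[RequestLog]) -> Dict[str, int]:
    """Assumed definition: for each request, count the requests in progress
    at the moment it started, including itself. A request is treated as in
    progress on the half-open interval [start, end)."""
    return {
        r.request_id: sum(1 for other in logs if other.start <= r.start < other.end)
        for r in logs
    }


if __name__ == "__main__":
    logs = [
        RequestLog("a", 0.0, 1.0),
        RequestLog("b", 0.2, 0.5),
        RequestLog("c", 0.4, 1.2),
        RequestLog("d", 1.1, 1.3),
    ]
    print(concurrency_at_start(logs))  # {'a': 1, 'b': 2, 'c': 3, 'd': 2}
```

If concurrency values regularly reach the number of slots you’ve set, later requests are likely to have been queued. The pairwise comparison is O(n²), which is fine for a sketch; a sort-based sweep would scale better to large logs.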

The new system doesn’t magically give you unlimited performance: you’re still limited by the underlying processing power of the database and other resources. It does, however, mean that you aren’t artificially limited by a single queue.

So if you’re expecting or planning an API that is going to get lots of traffic, please speak to us and we’ll make sure the resources are there to handle it.